The Deal of the Century

Executive Summary

(This 2,700-word Executive Summary follows on from a 700-word Introduction on the Deal of the Century webpage)

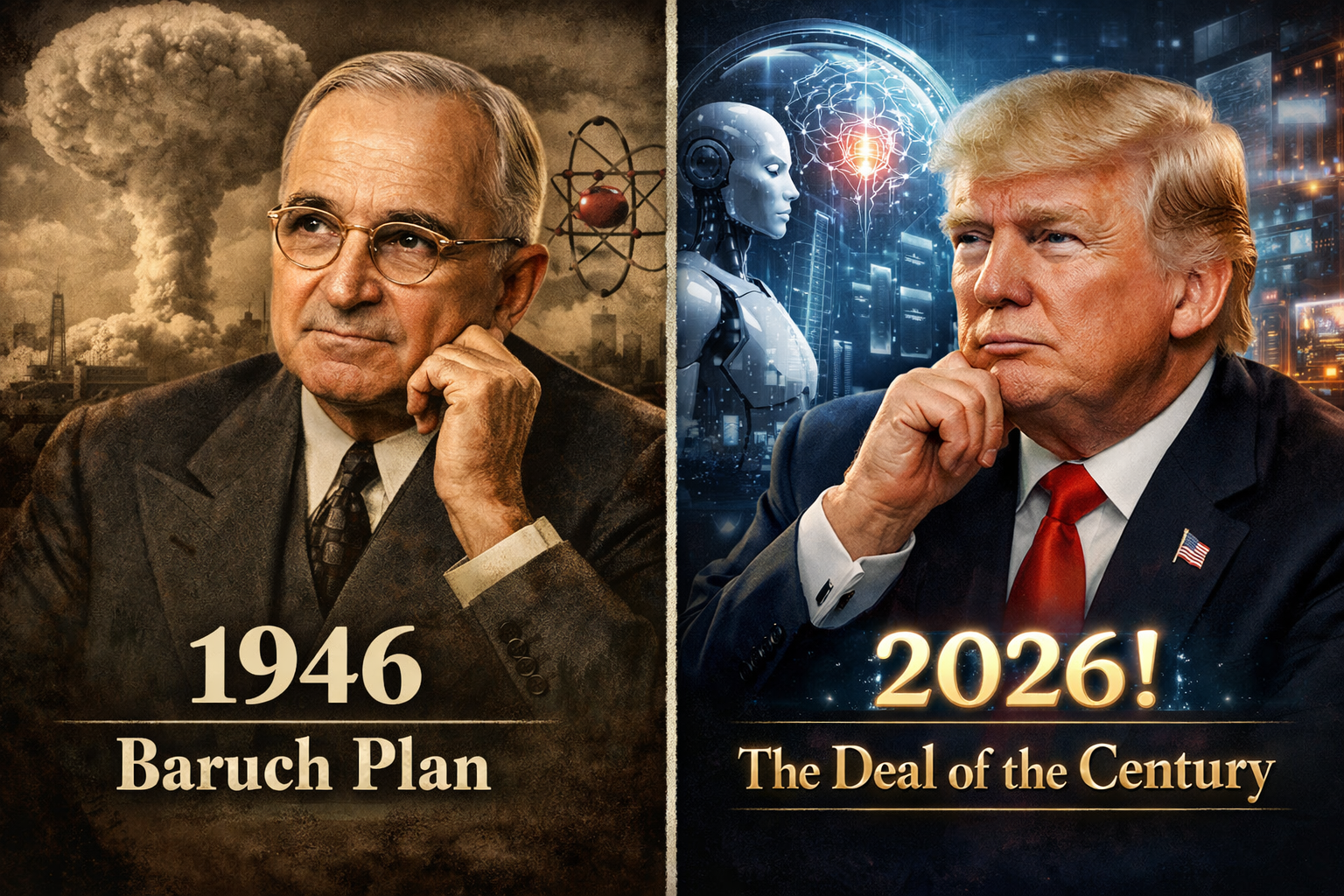

There is still a timely path to convince President Trump, on purely pragmatic grounds, to co-lead with President Xi the boldest treaty in human history: a global agreement to prevent AI's immense risks and realize its astounding potential. This is The Deal of the Century.

Who We Are

The Coalition comprises 10 international NGOs and 40+ exceptional advisors and team members (including former officials from the United Nations, National Security Agency, World Economic Forum, UBS, Yale, and Princeton), plus 24 contributors to the Strategic Memo. It was conceived and is led by Rufo Guerreschi.

Launched in July 2024, the Coalition has activated over 2,100 hours of professional pro-bono work. It has been seed-funded by Jaan Tallinn's Survival and Flourishing Fund and by Ryan Kidd since February 2025. (See team and contributors)

Five Core Treaty Objectives

The goal of The Deal of the Century is not to persuade Trump and key influencers to pursue just any treaty or treaty-making process for AI.

Success in persuading Trump, key influencers, other key world leaders, and stakeholders, and in maximizing the chances of a positive treaty outcome, requires an AI treaty that maximizes the following objectives in a timely, reliable, and durable way:

(1) Ban ASI and prevent catastrophic AI misuse, in civilian and military domains.

(2) Foster safe AI and scientific innovation and deployment leading to a safe, humanist, widely shared, reasonably cautious, and optimistic pathway to a human-first flourishing of all sentient beings.

(3) Secure and future-proof China’s and the US’s economic leadership, to a certain degree, and protect each against the overwhelming dominance of the other.

(4) Secure and future-proof top AI firms’ valuation and innovation agency, to a certain degree, so as to ensure freedom of innovation for private individuals and citizens within wide safety constraints.

(5) Durably reduce global concentration of power and wealth, while making every person and nation substantially or radically better off through the abundance that governed AI will bring.

Three Core Treaty-Making Requirements

Maximizing those five treaty objectives requires a treaty-making process radically different from the models that failed on nuclear weapons and climate. Three design features are essential:

(a) Bold, timely, federal, and self-correcting — because the window is measured in months, not decades, and because excessively centralizing AI governance globally would be both impossible and dangerous. Decisions should default to the lowest possible level (subsidiarity), escalating to global coordination only for the most dangerous capabilities.

(b) A two-stage roadmap:

Stage 1. US-China leadership in framing a temporary emergency agreement to ensure deep reciprocal transparency, freeze the gravest risks, and jointly build, at wartime pace, mutually-trusted diplomatic communications and treaty-enforcement mechanisms;

Stage 2. A realist constitutional convention on AI, designed to avoid a fatal veto. It is "realist" in that GDP-based vote weighting reflects huge power asymmetries, while still preventing a duopoly and ensuring subsidiarity. It is inspired by the 1787 US Constitutional Convention, as Sam Altman suggested in 2023.

(c) Formal roles for security agencies, top AI labs, key spiritual traditions, top AI scientists, and citizens assemblies — because politicians alone lack the technical knowledge, operational expertise, moral authority, and democratic legitimacy the scope of this treaty demands.

Our Predicament

For better or worse, our predicament is shockingly stark. It is laid bare before our eyes, yet few have the clarity and courage to face it. A declared, ongoing winner-take-all race to Artificial Superintelligence (ASI) is fast bringing humanity to a three-way fork in the road:

(a) catastrophic loss of control over AI, risking extinction or AI takeover

(b) authoritarian capture—an immense, unaccountable concentration of power

(c) humanity's triumph, via reliable, durable, expert, participatory, federal, and open global governance of AI

Middle outcomes are highly unlikely. Everything hinges on Trump and Xi: whether they will urgently co-lead a proper global AI treaty-making process, starting with their April summit.

Means

We're executing a precision persuasion campaign aimed at the key potential influencers of Trump's AI policy, with two components:

(a) an evolving 356-page Strategic Memo, a treasure trove of deeply researched "persuasion profiles" of each key potential influencer of Trump's AI policy (their interests, philosophies, psychology, and key AI predictions) and deep analysis and prescriptions for ultra-high-bandwidth treaty-making, globally-trusted treaty enforcement, and much more.

(b) a direct engagement campaign via periodic tours to expand our network of liaisons across DC, the Bay Area, and Rome/Vatican (and soon Mar-a-Lago, Singapore, and the New Delhi AI Action Summit).

Targeted influencers include Vance, Pope Leo XIV, Suleyman, Bannon, Hassabis, Amodei, Altman, Gabbard, Rubio, Carlson, Rogan, Musk — and possibly Kratzios, Sacks and even Thiel.

As a result of our deep research, we envision a key aggregating role for Vance and/or Pope Leo XIV, and a proactive role for humanist AI lab leaders like Suleyman, Amodei, Altman, Hassabis and even Musk. We foresee Rome/Vatican serving as a hub where influencers converge, and Singapore then playing a key role as host of the treaty-making.

(See the Strategic Memo, 2025 Achievements and 2026 Roadmap).

Map of primary locations of targeted influencers:

Not Just Any AI Treaty-Making Process

A global AI treaty-making process is not guaranteed to produce a serious treaty, to do so in time, or to avoid a treaty that leads to dystopia.

Depending on the nature of the treaty-making process, as outlined in the three-way fork framing above, it will lead to one of three outcomes:

(a) A treaty is not attempted or fails to prevent ASI.

This leads to an AI takeover: an extremely uncertain coin-flip gamble between Near Human Extinction and an ASI-governed Human Utopia, in which, for some reason, an ASI cares about our wellbeing and agency.

(b) A treaty inadvertently entrenches a durable global authoritarian oligarchy.

This leads to a Human Power Grab: a dystopia led by a few humans through AI.

(c) A proper treaty prevents ASI and reduces concentration of power and wealth.

This leads to AI-Assisted Human Triumph, unleashing unimagined abundance, wellbeing, and individual and collective freedoms.

Middle outcomes among those three scenarios are highly unlikely. Stopping AI progress altogether is virtually impossible, and undesirable. Hence, we must strive with all we have for AI-Assisted Human Triumph, in the greatest, riskiest, scariest and most exciting endeavor humans have ever faced.

2025 Achievements

With only $72,000 in seed grants, we built a 350-page Strategic Arsenal with more actionable intelligence than any document we're aware of, established 85+ pathway contacts towards influencers in the Bay Area and DC, and opened direct pathways to 2 of 10 influencers. We held two dinners for AI lab contacts in SF, two full-day field engagements at Anthropic and OpenAI HQs, 23+ direct engagements with AI lab officials, and 15+ with security think-tank officials in DC.

(See 2025 Achievements)

2026 Roadmap

The window is now. Trump's anticipated meeting with Xi in late April 2026 creates a once-in-a-generation opportunity. Our targets: 150+ introducer engagements across the four hubs (Bay, DC, Rome, Mar-a-Lago), 15+ direct engagements with influencers or their key staff, 3+ substantive meetings with influencers themselves, 3+ Strategic Memo updates timed to the summits, and meetings in Rome to catalyze a humanist AI alliance for a US-China-led AI treaty. (See 2026 Roadmap)

How Feasible Is It?

Most AI and political experts believe it is just too late or impossible, or that Trump’s radical unilateralism will never lead to a proper, fair and effective AI treaty. Most in Silicon Valley believe it is bound to create a global surveillance dystopia. But they are wrong — and here's why:

1) Why China's Calls for Global AI Governance Are Likely Genuine

Skeptics argue Beijing's calls for AI governance are pure strategic positioning — the follower slowing the race. But two years of consistent action suggest otherwise. Since Xi launched the Global AI Governance Initiative in October 2023, China has signed the Bletchley Declaration acknowledging AI's "unintended issues of control," proposed WAICO with a 13-point action plan, and agreed with Biden that humans — not AI — must control nuclear weapons. Vice-Premier Ding Xuexiang warned at Davos 2025 that unregulated AI is a "grey rhino" — a visible but ignored catastrophe. Unlike nations whose governance consists of declarations, China has implemented binding regulations: pre-deployment safety assessments, watermark mandates. Strategic benefit and genuine concern aren't mutually exclusive. The evidence suggests Beijing would genuinely engage in a serious treaty — if the United States offered credible partnership.

(See: Is China's Call for Global AI Governance Strategic or Genuine?)

2) AI Concerns of US and Republican Voters

Trump voters are terrified of AI, increasingly so, and call for a strong AI treaty, much as Americans did in 1946 in the months leading to the Baruch Plan. Back in 2023, 55% of citizens surveyed in 12 countries were already fairly or very worried about "loss of control over AI". By 2025, 78% of US Republican voters believed artificial intelligence could "eventually pose a threat to the existence of humanity". The share of Americans very or somewhat concerned about AI “causing the end of the human race” rose from 37% in March 2025 to 43% in July 2025. In October 2025, 63% of US voters believed it likely that "humans won't be able to control it anymore", and 53% believed it somewhat or very likely that "AI will destroy humanity". Moreover, 77% of all US voters support a strong international AI treaty.

AI is not yet at the top of citizens' agenda, but that is bound to change suddenly as ever more revelations, accidents, capability advances, and warnings from public figures emerge.

3) Trump’s Psychology

Most believe that Trump’s raw, unilateral approach to foreign policy, together with his style and psychology, makes it impossible for him to lead history’s boldest treaty.

On the contrary, Trump's hyper-non-ideological political realism and pragmatism, his historically low ratings and need for a "big win," his voters’ deep and increasing AI concerns, his instinct for self-preservation and survival, his aversion to extremely weak multilateral institutions, his penchant for big deals and unpredictable pivots, the deep AI concerns of some of his close advisors, and even his deep unreliability all point to his persuadability to ink a Deal of the Century on AI. It would be a deal based on a trustless “trust or verify” approach rather than a weak and short-lived “trust but verify” treaty in the Reagan-Gorbachev mold. It would also be a deal that recognizes how extremely weak current international institutions, treaties, and law are: thin post-colonial veils of “international niceties” acting as makeup for a world governed by the hard and soft raw power of half a dozen nations.

(See the post 22 Reasons Why Trump Can Be Persuaded)

4) The Precedent of the 1946 Baruch Plan

Presented to the United Nations on June 14th, 1946, by Bernard Baruch on behalf of President Truman, barely one hour after the birth of Donald Trump, the Baruch Plan sought to create a new UN agency to bring all dangerous research, arsenals, facilities, and supply chains for nuclear weapons and energy under exclusive veto-free international control. It was meant to be extended to all other globally dangerous technologies and weapons. It was, and still is, by far the most ambitious treaty proposal in the history of mankind. Approved by 10 out of 11 members of the UN Security Council, it nearly succeeded. Some of the most influential AI experts and leaders have called for the Baruch Plan as a model for AI governance, including Yoshua Bengio, the most cited AI scientist, Ian Hogarth (UK AI Safety Institute), Jack Clark (Anthropic), and Jaan Tallinn (Future of Life Institute).

(See Strategic Memo v2.6)

5) A Treaty-Making that Can Succeed while Avoiding Global Authoritarianism

The Baruch Plan was catalyzed by public concern, by the persuasion of a few key advisors, and by a pragmatic US president; the parallels with today’s AI predicament are astounding. Trump has a chance to succeed where Truman failed, and build an unparalleled legacy, by advancing a Baruch Plan for AI: The Deal of the Century. The Baruch Plan nearly succeeded, and the key reasons for its failure (a lack of proportionate diplomatic bandwidth, of a fitting treaty-making model, and of mutually-trusted treaty-enforcement mechanisms) are today easily addressable via a US-China-led, wartime-paced “global Apollo program” for mutually-trusted diplomatic and enforcement systems. (See Strategic Memo v2.6)

6) Trump’s AI Influencers’ Persuadability

After eleven months of in-depth analysis of key potential influencers of Trump's AI policy, we've discovered something counterintuitive: most are motivated more by philosophy, values, and legacy than by wealth or power per se. A shift in even a few of the 8 key AI predictions held by some influencers could cascade into an informal alliance of influencers that sways Trump. (See Strategic Memo v2.6)

A Unique Opportunity

With the emerging political window, the constraint is no longer strategy or positioning: it is operational capacity. Two or three dedicated hires can easily transform our arsenal and open pathways into a highly tailored, high-bandwidth, targeted persuasion campaign. Our 350-page treasure trove of tailored intelligence and our extreme capital efficiency (~$7,500/month) position us to 50x our impact within months with moderate funding.

Funding Needs

With only $75,000, we were able to activate 2,100 hours of professional pro-bono work and deliver outstanding results in 2025. We are now seeking $100,000–$400,000 to move to the next stage (and urgent bridge funding of $10–30k). Every dollar goes to the mission: no fancy offices, no high staff costs. We operate at ~$7,500/month, a fraction of the budget of a typical DC policy NGO. (See Donate or Funding So Far)

Ways You Can Help

We need: introductions to the influencers profiled in our Memo, or to their close advisors; funding to move to the next stage or maintain operations; and contributors to the Memo with expertise in AI policy or diplomacy, or with access to target networks.